Cursor Security: Key Risks, Protections & Best Practices

Over the past year, I have witnessed AI-assisted coding tools transition from experimental add-ons to integral components of the modern development workflow. Among them, Cursor has quickly stood out for its ability to embed large language models directly into the IDE, making code generation, refactoring, and problem-solving faster than ever. That speed and convenience, however, come with a trade-off that many teams overlook.

By combining agent-driven commands, automated workflows, and deep integration with package ecosystems, Cursor creates a development environment that is both highly capable and more exposed to security threats than traditional editors. Prompt injection, malicious code execution, and supply chain attacks have already shifted from theoretical concerns to documented realities in active development environments, a phenomenon I have encountered firsthand while working with teams to investigate and remediate AI-driven security incidents.

Key Takeaways

- Cursor security is essential for any team adopting the platform, as its AI-assisted features expand both productivity and the potential attack surface.

- Risks such as prompt injection, malicious code execution, and supply chain attacks have already shifted from theoretical to documented realities in real-world development environments.

- Features like auto-run workflows, agent-driven commands, and deep package ecosystem integration can be exploited if not managed with strict policies.

- Regularly reviewing vulnerability reports, monitoring the GitHub security page, and applying security advisories helps address critical incidents before they escalate.

- Security teams should combine technical controls with developer training to reduce the chance of exposing sensitive information or introducing unsafe dependencies.

- Using Reco’s real-time threat detection and code-level insights helps organizations identify and block Cursor-specific threats before they impact production.

What is Cursor and Why Cursor Security Matters

Cursor is an AI-powered code editor built on Visual Studio Code that integrates large language models into the IDE to accelerate coding through automation, intelligent suggestions, and agent-driven actions. It allows developers to generate, refactor, and execute code directly from natural language prompts, often without leaving the editor. Its deep integration with automated workflows and external tools means that security is not just a feature consideration but a core requirement for safe adoption.

AI-Assisted IDE Built with Agentic Coding

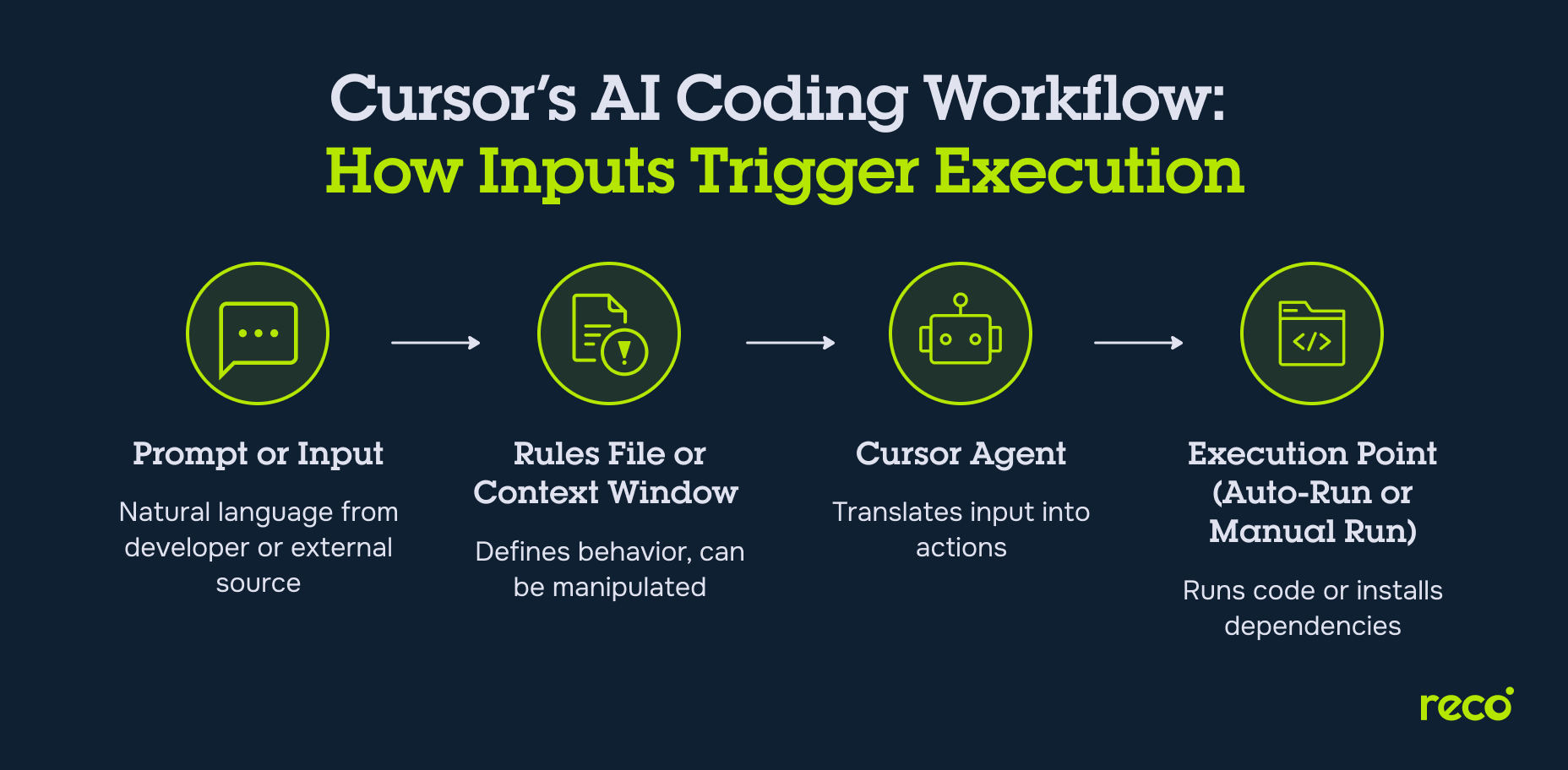

Cursor functions as an agentic coding environment where AI models can take actions beyond code suggestions. They can run commands, install dependencies, and alter files based on project context and user prompts. This accelerates delivery but can also execute harmful instructions if those prompts come from untrusted sources.

Auto-Run Workflows, LLMs, and AI Command Execution

Cursor supports workflows that allow large language models to run commands automatically. These can be triggered by user prompts or by external sources such as Model Context Protocol (MCP) servers. Vulnerabilities like the CurXecute exploit have shown that poisoned data can rewrite configurations and run attacker-controlled code without warning.

Developer Productivity vs. Expanded Attack Surface

By reducing repetitive work and integrating package suggestions, Cursor boosts productivity. However, each integration point, from MCP connections to package registries, creates additional opportunities for malicious code injection, credential theft, and supply chain attacks. For example, researchers have documented cases where AI-suggested dependencies included typo-squatted packages carrying malicious code. Balancing these benefits with strong security controls is critical for safe adoption.

How Cursor Works

Cursor combines AI-driven automation with deep IDE integration, giving developers speed and flexibility. These same capabilities also influence its security profile, making it important to understand the underlying mechanisms:

- Rules, Files, and Behavior Definitions: Cursor uses project-specific rules files to define coding patterns, agent behavior, and restrictions. While this ensures consistent output, malicious or poorly written rules can manipulate the AI into introducing insecure logic or exposing sensitive information.

- Auto-Run Mode and Deny List Mechanisms: Cursor can automatically execute AI-generated commands without manual review, streamlining repetitive tasks. If the deny list is incomplete or outdated, this mode could trigger unauthorized operations or fetch malicious code from untrusted sources.

- Agent Integration with IDE and MCP-Like Protocols: Cursor agents interact directly with the IDE and external resources, following a model similar to the Model Context Protocol. This allows seamless AI assistance, but also means attackers could exploit protocol handling flaws to gain access to source code or inject malicious payloads.

7 Core Cursor Security Risks

While Cursor’s integration of large language models and automated agent workflows can accelerate development, it also opens distinct attack paths that require careful oversight. The following risks represent the most pressing threats confirmed by research and observed in real-world development environments.

1. Prompt Injection and Command Execution

Attackers can craft malicious prompts that instruct Cursor’s AI to run unintended commands or alter critical project files. Because these actions may occur directly within the integrated terminal or file system, exploitation can lead to code manipulation or complete environment compromise.

2. Context Poisoning Across Projects

Cursor maintains conversational and code context to improve AI suggestions. If that context is polluted in one project - for example, with misleading code snippets or instructions - it can carry over into unrelated work, causing logic corruption, introducing security flaws, or leaking sensitive data.

3. Hidden Payloads in Rules Files

Rules files define how agents behave, including automation triggers and execution parameters. A compromised rules file can contain hidden payloads, granting an attacker persistent access or the ability to run arbitrary code whenever the file is loaded.

4. Agent Auto-Runs Without Oversight

Auto-run mode allows agents to execute commands without direct human approval. While this can improve speed, it also removes a key review checkpoint, creating an opportunity for unsafe commands or scripts to execute unnoticed, potentially introducing malicious code or compromising the development environment.

5. Token or Credential Leaks

If AI outputs inadvertently contain authentication tokens, API keys, or login credentials, these secrets can be exposed to logs, version control systems, or even unauthorized collaborators. Stolen credentials may allow attackers to gain direct access to systems and sensitive data.

6. Malicious NPM Package Execution

Because Cursor can install dependencies as part of its AI-driven workflows, it may fetch malicious packages from public registries. These packages can include obfuscated scripts designed to exfiltrate data, deploy malware, or run remote code upon installation.

7. Namespace Collisions and Agent Spoofing

If two agents share the same namespace, a malicious actor can register a spoofed agent that mimics a trusted one, effectively hijacking Cursor’s agent. This can trick the system into running unauthorized commands or leaking confidential data to the attacker-controlled process.

Security Best Practices for Cursor Security

Strengthening security in Cursor requires both proactive configuration and ongoing oversight. The following measures reduce exposure while preserving the productivity gains of AI-assisted development:

- Disable or Restrict Auto-Run Commands: Limit or fully disable automated command execution to prevent unauthorized changes or malicious actions from running without review.

- Validate All Prompt and Rules File Inputs: Review and approve any prompt or rules file before use to detect hidden instructions or malicious payloads.

- Sanitize Context Before AI Agent Use: Remove sensitive code fragments, credentials, and proprietary logic from agent context windows to reduce unintended data exposure.

- Vet NPM Packages Before Execution: Check package sources, maintainers, and recent update histories to avoid executing code from compromised or malicious libraries.

- Monitor Logs and Generated Code Paths: Continuously review command logs and file changes to identify unexpected activity or unauthorized modifications.

- Update to Latest Cursor Releases (v1.3+): Keep Cursor updated to ensure the latest security patches, denial mechanisms, and configuration enhancements are applied. Many of these measures align with broader principles found in a cloud security checklist, helping teams adopt consistent protections across all development environments.

Real-World Cursor Attack Scenarios

While many Cursor security issues are theoretical in other AI coding tools, these scenarios reflect credible, technically feasible attack chains that researchers and practitioners have already explored or simulated. The following table summarizes each case, the method of compromise, and its potential impact on a development workflow.

Security Gaps in Cursor’s Architecture

Cursor’s deep AI integration delivers impressive productivity gains but also introduces structural weaknesses that attackers can exploit. These gaps are rooted in how the IDE handles inputs, execution permissions, context integrity, and operational visibility.

Weak Input Sanitization for Agent Commands

Cursor agents can interpret and execute natural language instructions without strict sanitization or whitelisting. This leaves room for prompt injection attacks where malicious instructions are hidden inside otherwise legitimate prompts, resulting in unintended shell commands or code changes.

Unrestricted Auto-Run Execution

The auto-run mode allows AI-generated code or commands to be executed without manual review. Without enforced confirmation prompts or execution limits, this feature can enable rapid exploitation if a malicious payload is generated or imported from a compromised source.

Lack of Context Validation for External Files

When rules files, configuration data, or code snippets are imported, Cursor does not consistently verify the origin or integrity of these files. This absence of context validation increases the risk of hidden payloads being executed in the background as part of normal development activity.

Minimal Telemetry on Agent Actions

Cursor provides limited visibility into what an agent has executed, modified, or accessed during a session. Without detailed telemetry and logging, malicious actions may go unnoticed until significant damage has already occurred, making incident response far more difficult.

How Reco Protects Cursor with Proactive Threat Detection and Code-Level Insights

Cursor’s AI-driven coding features demand security controls that can match their speed and complexity. Reco delivers targeted protection by combining real-time threat detection with deep visibility into AI-generated code.

- Real-Time Detection of Prompt Injection: Reco identifies and blocks malicious prompt patterns before they can trigger harmful code execution or system changes.

- Context-Aware Execution Policy Enforcement: Applies adaptive security policies that prevent unauthorized auto-run commands or risky workflows based on project context.

- Alerts on Unusual Tool and Code Behavior: Flags abnormal agent actions, suspicious package imports, and unexpected code paths.

- Audit Trails for Agent and Rules Activity: Records detailed histories of agent actions, rules file edits, and execution sequences for investigations and compliance checks.

- SaaS-Wide Visibility into Cursor-Generated Code: Reco centralizes oversight across all connected SaaS platforms to identify and respond to risks introduced through AI-generated code, strengthening the organization’s overall SaaS security posture.

What’s Next: Future Threats Targeting Cursor

As Cursor adoption grows, attackers are likely to evolve their tactics. The threats below represent realistic, research-backed possibilities that build on patterns already observed in AI-assisted development tools.

AI Worms via Prompt & Web Exploit Chains

An AI worm could propagate through prompts and embedded web content, executing actions on multiple developer systems in sequence. If combined with command execution, it could spread via shared repositories or automated workflows.

Indirect Injection from Embedded Content

Malicious payloads could be hidden in README files, documentation, or markdown-rendered HTML. Once parsed into the context window, they could trigger unauthorized commands without the user realizing the origin.

Context Poisoning Using Media, Not Just Text

Attackers could embed malicious instructions in image metadata, audio transcripts, or video captions. When these files are processed by Cursor’s AI agents, they could alter execution flow or inject hidden operations.

Supply Chain Attacks with Signed Rule Abuse

Even signed or “trusted” rules files could be weaponized if attackers compromise a package maintainer’s account. This would bypass many current verification checks, giving malicious code a path into production environments.

Conclusion

Cursor represents a powerful shift in how developers interact with AI-assisted coding, but its advanced automation and agent-driven workflows introduce a security model unlike traditional IDEs. Understanding the risks, both present and emerging, is important for development and security teams aiming to prevent exploitation before it happens. By applying rigorous input validation, restricting automated execution, and maintaining continuous oversight, organizations can balance the productivity benefits of Cursor with a strong, adaptive defense strategy. As AI-driven development evolves, staying ahead of new attack patterns will be necessary to ensure Cursor remains an asset rather than an entry point for adversaries.

What is Cursor Hijacking?

Cursor hijacking occurs when a malicious actor takes control of the IDE’s AI agent or execution pipeline, allowing them to run commands, modify files, or change settings without the developer’s consent. This can happen through spoofed agents, poisoned rules files, or prompt injection chains that escalate into persistent control. Once hijacked, the attacker can manipulate code, steal credentials, or insert backdoors that survive across projects.

What is Prompt Injection in the Cursor and Why is It Dangerous?

Prompt injection in the Cursor is the manipulation of natural language inputs to trigger unintended actions within the AI-assisted IDE. Attackers embed harmful instructions inside what appears to be benign text, leading the AI to execute commands, alter files, or install unsafe packages. The danger lies in the fact that these actions can occur directly within the development environment, often without obvious signs to the user, enabling rapid compromise of code and systems.

How Can Rules Files Introduce Persistent Backdoors?

Rules files in Cursor define agent behavior, automation triggers, and execution permissions. If an attacker modifies these files to include hidden payloads, the malicious logic is executed each time the file is loaded, effectively creating a persistent backdoor. Because rules are often shared across projects or teams, a single compromised file can spread the attack widely before detection.

Can AI Agents in Cursor Leak Tokens or Secrets?

Yes. If AI agents generate output that includes authentication tokens, API keys, or other sensitive credentials, those secrets can be exposed in logs, commit histories, or shared code snippets. Even a single leaked token can enable attackers to access private repositories, cloud resources, or production systems, making secret management and output review critical.

How Can Enterprises Safely Adopt Cursor with Security Controls?

Enterprises can adopt Cursor securely by combining technical safeguards with governance practices:

- Disable or restrict auto-run features to ensure all AI-generated commands are reviewed before execution.

- Implement strict allowlists for dependencies and package sources.

- Sanitize the project context before passing it to AI agents to prevent data leakage.

- Enforce rules, file integrity checks, and maintain version-controlled configurations.

- Continuously monitor command logs, file changes, and agent activity.

How Does Reco Monitor and Mitigate Cursor-Specific Threats?

Reco integrates with Cursor to provide real-time detection, contextual policy enforcement, and deep visibility into AI-driven actions:

- Blocks known prompt injection patterns before they execute.

- Prevents unauthorized auto-run commands through adaptive policies.

- Flags unusual package imports, code paths, and tool usage.

- Maintains audit trails of agent actions and rules file modifications for forensic analysis.

- Centralizes oversight of Cursor-generated code across the organization’s SaaS ecosystem to catch threats before they spread.

Gal Nakash

ABOUT THE AUTHOR

Gal is the Cofounder & CPO of Reco. Gal is a former Lieutenant Colonel in the Israeli Prime Minister's Office. He is a tech enthusiast, with a background of Security Researcher and Hacker. Gal has led teams in multiple cybersecurity areas with an expertise in the human element.

%201.svg)